In the context of big data, the terms "dataset" and "database" refer to different concepts.

A dataset is a collection of data, while a database is a system, managed by software, for storing and managing data. A dataset can be stored in a database, and a single database can hold multiple datasets along with the infrastructure needed to manage and manipulate them.

Dataset: A dataset is a collection of related data that is organized for a specific purpose. It represents a single unit of information that can be analyzed and processed. A dataset can consist of various types of data, such as text, numbers, images, or any other form of digital information. In the context of big data, datasets are often large and complex collections of data generated from various sources.

Datasets in big data are typically used for analysis, machine learning, and other data-driven tasks. They may include structured data (e.g., from relational databases), semi-structured data (e.g., JSON or XML documents), or unstructured data (e.g., text documents, images, videos). Datasets can be stored as files in formats such as CSV, JSON, or Parquet, or held in databases.
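To illustrate that a dataset is independent of its storage format, here is a minimal sketch that writes the same small dataset as CSV and as JSON and reads both back. The records and field names (`id`, `city`, `temperature_c`) are hypothetical and chosen only for illustration. Parquet is left out because it needs a third-party library.

```python
import csv
import io
import json

# Hypothetical records, used only for illustration.
records = [
    {"id": 1, "city": "Oslo", "temperature_c": 4.5},
    {"id": 2, "city": "Lima", "temperature_c": 19.0},
]

# Serialize the dataset as CSV. Every value is written as text.
csv_buffer = io.StringIO()
writer = csv.DictWriter(csv_buffer, fieldnames=["id", "city", "temperature_c"])
writer.writeheader()
writer.writerows(records)
csv_text = csv_buffer.getvalue()

# Serialize the same dataset as JSON. Numbers keep their types.
json_text = json.dumps(records)

# Read both representations back into Python objects.
from_csv = list(csv.DictReader(io.StringIO(csv_text)))
from_json = json.loads(json_text)

print(from_csv[0])   # values come back as strings
print(from_json[0])  # values come back as int, str, and float
```

The content is the same in both cases, but the CSV round trip returns every value as a string, so type information has to be restored by the reader.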

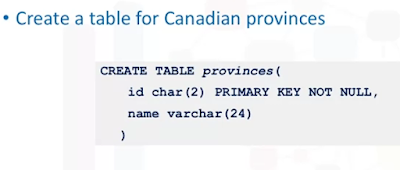

Database: A database, on the other hand, is an organized collection of data, usually structured, that is stored, indexed, and managed by software called a database management system (DBMS). This organization allows for efficient retrieval, modification, and querying. The DBMS provides mechanisms for storing and retrieving data, enforcing data integrity, and supporting data manipulation operations.
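The mechanisms mentioned above (storage, integrity enforcement, modification, and querying) can be sketched with SQLite through Python's built-in `sqlite3` module. The `customers` table and its rows are assumptions made for this example, not part of any real system.

```python
import sqlite3

# An in-memory database, so the sketch leaves no files behind.
conn = sqlite3.connect(":memory:")

# Storage: the schema declares the structure and the integrity rules.
conn.execute(
    """
    CREATE TABLE customers (
        id    INTEGER PRIMARY KEY,
        name  TEXT NOT NULL,
        email TEXT NOT NULL UNIQUE
    )
    """
)
conn.executemany(
    "INSERT INTO customers (name, email) VALUES (?, ?)",
    [("Ada", "ada@example.com"), ("Grace", "grace@example.com")],
)

# Integrity: the DBMS rejects a row that violates the UNIQUE constraint.
try:
    conn.execute(
        "INSERT INTO customers (name, email) VALUES (?, ?)",
        ("Imposter", "ada@example.com"),
    )
    duplicate_rejected = False
except sqlite3.IntegrityError:
    duplicate_rejected = True

# Modification: update a record in place.
conn.execute(
    "UPDATE customers SET name = ? WHERE email = ?",
    ("Ada L.", "ada@example.com"),
)

# Querying: retrieve the data in a defined order.
names = [row[0] for row in conn.execute("SELECT name FROM customers ORDER BY id")]
print(duplicate_rejected, names)
conn.close()
```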

Databases in the context of big data can refer to traditional relational databases, such as MySQL, Oracle, or SQL Server, as well as newer distributed systems designed for big data, like Apache Cassandra or MongoDB. Apache Hadoop is often mentioned alongside them, although it is a distributed storage and processing framework rather than a database in the strict sense. These systems are designed to handle the challenges of storing and processing large volumes of data across distributed systems.

Example:

Consider a dataset that represents a collection of sales data. Each row corresponds to a separate purchase, and the columns represent different attributes of the purchase, such as the customer's name, the item purchased, the price, and the date. The dataset can be expanded with more records to cover a larger set of sales data.
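A sales dataset of this shape could be sketched in Python as a list of rows, one per purchase. All names, items, prices, and dates below are hypothetical placeholders, not data from the original example.

```python
from datetime import date

# Hypothetical rows: one dictionary per purchase, one key per column.
sales = [
    {"customer": "Alice", "item": "Keyboard", "price": 49.99, "date": date(2024, 1, 5)},
    {"customer": "Bob", "item": "Monitor", "price": 199.00, "date": date(2024, 1, 6)},
    {"customer": "Alice", "item": "Mouse", "price": 19.50, "date": date(2024, 1, 9)},
]

# The columns are the attributes shared by every row.
columns = list(sales[0].keys())

# A simple analysis over the dataset: total revenue and spend per customer.
total_revenue = round(sum(row["price"] for row in sales), 2)

spend_by_customer = {}
for row in sales:
    previous = spend_by_customer.get(row["customer"], 0.0)
    spend_by_customer[row["customer"]] = round(previous + row["price"], 2)

print(columns)
print(total_revenue)
print(spend_by_customer)
```

Adding more records only means appending more dictionaries with the same keys. No database is involved at any point.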

From the above, a database table and a dataset may look like the same thing, but they are not. They are similar in that both represent structured collections of data. However, a database table is a specific construct within a database management system, while a dataset is a more general term that can encompass different types of data, including tables. Datasets can be more versatile, portable, and independent, while database tables are tightly coupled with the database management system and its specific rules and constraints. The main differences are:

1. Structure: A database table is a specific construct within a database management system (DBMS) that organizes data in rows and columns. Each column represents a specific attribute or field, while each row represents a record or entry in the table. On the other hand, a dataset is a more general term that refers to a collection of related data, which can be organized in various formats and structures, including tables. A dataset can contain multiple tables or other data structures, depending on the context.

2. Scope and Purpose: A database table is primarily used within a database management system to store and manage structured data. It is typically part of a larger database schema that includes multiple tables and relationships between them. The purpose of a database table is to provide a structured storage mechanism for data and enable efficient querying and manipulation operations. A dataset, on the other hand, can have a broader scope and purpose. It can represent a single table or a collection of tables, as well as other types of data such as files, documents, or images. Datasets are often used for analysis, machine learning, or other data-driven tasks, and they may include data from multiple sources or formats.
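As a rough sketch of a dataset that draws on multiple sources and formats, the following combines order records from CSV text with customer records from JSON text. Both inputs and all field names (`order_id`, `customer_id`, `amount`, `name`) are hypothetical.

```python
import csv
import io
import json

# Hypothetical source 1: orders exported as CSV.
orders_csv = "order_id,customer_id,amount\n1,10,25.0\n2,11,40.0\n3,10,15.0\n"

# Hypothetical source 2: customers delivered as JSON.
customers_json = '[{"customer_id": 10, "name": "Alice"}, {"customer_id": 11, "name": "Bob"}]'

# Index customers by id so each order can be matched to a name.
customers = {c["customer_id"]: c["name"] for c in json.loads(customers_json)}

# Build one combined dataset, converting CSV strings to proper types.
combined = []
for row in csv.DictReader(io.StringIO(orders_csv)):
    customer_id = int(row["customer_id"])
    combined.append(
        {
            "order_id": int(row["order_id"]),
            "customer": customers[customer_id],
            "amount": float(row["amount"]),
        }
    )

print(combined)
```

The resulting list is a single dataset ready for analysis, even though no single table or database ever held all of its fields.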

3. Independence: A database table is tightly linked to a specific database instance and is managed within the database management system. It is subject to the rules and constraints defined by the DBMS, such as data types, integrity constraints, and indexing. In contrast, a dataset can be more independent and portable. It can be stored and accessed in different formats and locations, such as CSV, JSON, Parquet files, or even distributed file systems. Datasets can be shared, transferred, and processed across different systems and tools without being tied to a particular database management system.
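The contrast between a table bound to its DBMS and a portable dataset can be sketched as follows, again using SQLite via `sqlite3` as a stand-in for any DBMS. The `sales` table, its `CHECK` constraint, and its rows are assumptions made for this example.

```python
import csv
import io
import sqlite3

conn = sqlite3.connect(":memory:")

# The table lives inside the DBMS and is subject to its declared rules.
conn.execute(
    "CREATE TABLE sales (item TEXT NOT NULL, price REAL NOT NULL CHECK (price >= 0))"
)
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("Keyboard", 49.99), ("Mouse", 19.5)],
)

# The DBMS enforces the constraint: a negative price is rejected.
try:
    conn.execute("INSERT INTO sales VALUES (?, ?)", ("Refund", -5.0))
    constraint_enforced = False
except sqlite3.IntegrityError:
    constraint_enforced = True

# Export the table's contents to CSV text, turning it into a portable dataset.
cursor = conn.execute("SELECT item, price FROM sales ORDER BY item")
header = [column[0] for column in cursor.description]
buffer = io.StringIO()
writer = csv.writer(buffer)
writer.writerow(header)
writer.writerows(cursor)
conn.close()

# The database connection is closed; the CSV can still be read by any tool.
portable_rows = list(csv.DictReader(io.StringIO(buffer.getvalue())))
print(constraint_enforced, portable_rows)
```

The trade-off is visible in the result: the exported dataset travels freely, but the type and constraint guarantees stay behind in the database, so the prices come back as plain strings.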